Architecture

Building a self-hosted BaaS means solving deployment complexity that would typically call for a DevOps team. Pendulum's architecture automates everything from local Docker orchestration to multi-service AWS deployments in production.

Local Development Experience

Developers expect their local environment to be “turnkey ready” and do not want to waste time wrestling with service dependencies, port conflicts, or MongoDB installations.

Pendulum's local architecture consists of three containerized services orchestrated with Docker Compose and connected via a bridge network:

- MongoDB with persistent volumes, automated initialization, and container health monitoring

- App service handling CRUD operations, serving the Admin Dashboard, and managing inter-service communication

- Events service maintaining persistent SSE connections and coordinating real-time event distribution

Pendulum spins up all three services through a single command, npx pendulum dev.

This containerized approach eliminates manual environment setup but introduces Docker as a dependency. Containers add memory overhead compared to native processes, but the alternative would require developers to install MongoDB, manage Node.js versions, and handle service startup ordering by hand. Because these isolated containers mirror our production architecture, the two environments stay aligned, which avoids the all-too-common “works on my machine” problem.
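To make the startup-ordering problem concrete, here is a minimal sketch of how a start order can be derived from declared dependencies. It is illustrative only and is not taken from Pendulum's code: the `ServiceGraph` type, the `startupOrder` function, and the assumption that both the app and events services wait on MongoDB are hypothetical.

```typescript
// Hypothetical dependency map: each service lists the services that must be ready first.
type ServiceGraph = Record<string, string[]>;

// Assumption for illustration: app and events both wait for MongoDB.
const localServices: ServiceGraph = {
  events: ["mongodb"],
  app: ["mongodb"],
  mongodb: [],
};

// Depth-first topological sort: a service is emitted only after all of its dependencies.
function startupOrder(graph: ServiceGraph): string[] {
  const sorted: string[] = [];
  const state = new Map<string, "visiting" | "done">();

  const visit = (name: string): void => {
    const current = state.get(name);
    if (current === "done") return;
    if (current === "visiting") throw new Error(`Dependency cycle at ${name}`);
    const deps = graph[name];
    if (deps === undefined) throw new Error(`Unknown service: ${name}`);
    state.set(name, "visiting");
    for (const dep of deps) visit(dep);
    state.set(name, "done");
    sorted.push(name);
  };

  for (const name of Object.keys(graph)) visit(name);
  return sorted;
}

console.log(startupOrder(localServices)); // MongoDB is listed before the services that depend on it
```

Docker Compose expresses the same idea declaratively through service dependencies and health checks, so developers never have to write or maintain this ordering themselves.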

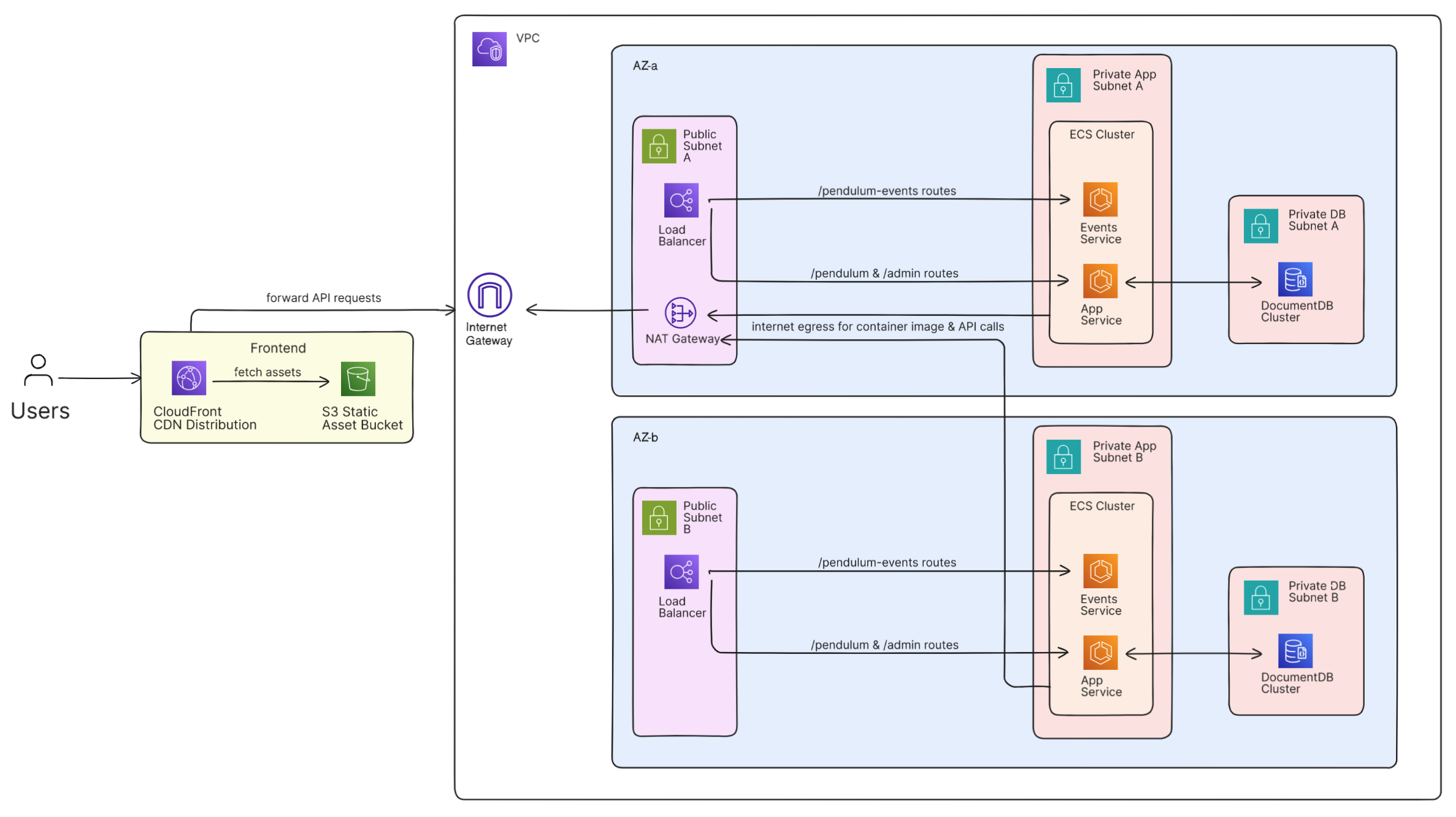

Production Architecture

Production systems require load balancing, auto-scaling, managed databases, security groups, and monitoring: complexity that can overwhelm developers. Pendulum eliminates this friction through Infrastructure-as-Code automation using AWS CDK, where a single npx pendulum deploy command provisions complete production infrastructure in an isolated VPC.

Cloud Provider Choice

Building a BaaS platform with automated deployment leads to a fundamental choice: take the breadth-first approach of supporting multiple cloud providers or focus deeply on one and provide the best developer experience possible. Supporting multiple cloud providers offers vendor flexibility but significantly increases development complexity and maintenance overhead.

We chose an AWS-first strategy to prioritize developer experience over provider flexibility. AWS offers a robust ecosystem of managed services, extensive documentation, and the most widespread adoption of any cloud provider. We accepted the tradeoff of vendor lock-in for production deployments, but our open source codebase enables users to adapt Pendulum for other providers if needed. Single-command deployments deliver more value than multi-cloud flexibility for developers focused on building frontend applications.

Container Orchestration

Production uses the same containerized service approach as our local development architecture. To handle container orchestration, including scaling, networking, and deployment management, we evaluated three primary options:

Elastic Kubernetes Service (EKS) offers advanced features like custom resource definitions, network policies, and fine-grained resource management. However, it requires significant operational expertise, complex YAML configurations, and ongoing cluster management that conflicts with our zero-operations philosophy.

EC2 instances offer complete control over the runtime environment, allowing custom optimizations and direct server access. But this approach requires manual scaling, security patching, and infrastructure management that works against our goal of simple, automated deployment.

Elastic Container Service (ECS) with Fargate delivers serverless containers with automatic scaling, integrated AWS networking, and zero server management. While it sacrifices some flexibility and creates AWS vendor dependency, it aligns with our goal of eliminating operational overhead for developers.

| Criterion | EKS (Kubernetes) | EC2 Instances | ECS with Fargate |
|---|---|---|---|
| Operational complexity | High: YAML configs, cluster management | High: manual scaling, patching, monitoring | Low: zero server management |
| Scaling | Advanced auto-scaling with custom metrics | Manual or basic auto-scaling groups | Automatic scaling based on CPU/memory |
| Portability | Portable across cloud providers | Portable but requires migration effort | AWS-specific service |
| Security | Complex RBAC, network policies, secrets | Manual security patching and hardening | AWS-managed security, automatic patching |

We chose Fargate to provide a simple and scalable production architecture that requires little to no management from our end users. Both app and events services can scale independently, while AWS handles underlying infrastructure provisioning and management.
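The idea of CPU-based scaling applied independently per service can be sketched with a simplified proportional rule. This is an approximation for intuition, not AWS's actual scaling algorithm or Pendulum's configuration; the `ScalingPolicy` shape, the `desiredTaskCount` function, and all numeric limits are hypothetical.

```typescript
// Hypothetical per-service scaling limits and CPU target.
interface ScalingPolicy {
  minTasks: number;
  maxTasks: number;
  targetCpuPercent: number;
}

// Simplified proportional rule: scale the task count by observed/target CPU,
// round up, then clamp to the policy's bounds.
function desiredTaskCount(
  currentTasks: number,
  observedCpuPercent: number,
  policy: ScalingPolicy
): number {
  const proportional = Math.ceil(
    currentTasks * (observedCpuPercent / policy.targetCpuPercent)
  );
  return Math.min(policy.maxTasks, Math.max(policy.minTasks, proportional));
}

// Each service carries its own policy, so one can grow while the other shrinks.
const appPolicy: ScalingPolicy = { minTasks: 1, maxTasks: 10, targetCpuPercent: 60 };
const eventsPolicy: ScalingPolicy = { minTasks: 2, maxTasks: 6, targetCpuPercent: 60 };

console.log(desiredTaskCount(2, 90, appPolicy));    // 3: app is above target, scale out
console.log(desiredTaskCount(4, 15, eventsPolicy)); // 2: events is idle, scale in to its minimum
```

Keeping a separate policy per service matters here because the events service holds long-lived SSE connections, so its load profile can differ from the request-driven app service.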

Database Selection

With MongoDB as Pendulum's database, we could either self-manage MongoDB on EC2 instances or use Amazon DocumentDB, AWS's managed, MongoDB-compatible service. DocumentDB works with our existing MongoDB database operations and offers managed scaling. It also provides automatic backups, replication across multiple Availability Zones, integrated security groups, and CloudWatch monitoring, features that would be difficult to replicate on a self-managed EC2 instance.

DocumentDB doesn't support all MongoDB features and deepens our AWS dependency, but self-managing MongoDB would require expertise in replica set management, backup strategies, security hardening, and 24/7 monitoring. That operational complexity conflicts with our zero-maintenance philosophy.

| Criterion | Self-Managed MongoDB on EC2 | Amazon DocumentDB |
|---|---|---|
| Operations | High operational overhead: manual installation, configuration, updates | Zero operational overhead: fully managed service |
| Scaling | Manual scaling: resize instances, configure sharding | Managed scaling: compute and storage scale independently |
| Backup and recovery | Manual: configure backup scripts, test restoration | Automatic backups, point-in-time recovery |
| MongoDB compatibility | Full MongoDB feature set | ~95% MongoDB compatibility, missing some advanced features |
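One practical consequence of the compatibility gap is that the same driver code may need different connection options in each environment. The sketch below is a hedged illustration rather than Pendulum's actual configuration: the `MongoTarget` type, the `buildMongoUri` function, and the host names are hypothetical. It relies on two documented DocumentDB behaviors: TLS is enabled by default on clusters, and retryable writes are not supported, so clients disable them.

```typescript
// Hypothetical description of a database endpoint.
interface MongoTarget {
  host: string;
  port: number;
  username: string;
  password: string;
  documentDb: boolean; // true when the endpoint is a DocumentDB cluster
}

// Builds a MongoDB connection URI, adding DocumentDB-specific options when needed.
function buildMongoUri(target: MongoTarget): string {
  // Percent-encode credentials so characters such as "@" or ":" cannot break the URI.
  const credentials =
    `${encodeURIComponent(target.username)}:${encodeURIComponent(target.password)}`;
  const base = `mongodb://${credentials}@${target.host}:${target.port}/`;
  if (!target.documentDb) return base;
  // DocumentDB: connect over TLS and turn off retryable writes.
  return `${base}?tls=true&retryWrites=false`;
}

// Local container (host name is an assumption).
console.log(buildMongoUri({
  host: "localhost", port: 27017, username: "dev", password: "p@ss", documentDb: false,
}));

// Production cluster (placeholder endpoint).
console.log(buildMongoUri({
  host: "example-cluster.docdb.amazonaws.com", port: 27017,
  username: "admin", password: "secret", documentDb: true,
}));
```

A real deployment would also need the Amazon CA bundle available to the driver for TLS verification, which this sketch leaves out.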

Load Balancing and Traffic Management

Our microservices architecture creates routing complexity: frontend requests need to reach the right service, services need to find each other as they scale, and developers expect a single endpoint for their SDK. AWS Cloud Map provides service discovery, so our backend services can communicate using logical names rather than hardcoded endpoints as containers scale.
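The benefit of logical names over hardcoded endpoints can be shown with a small in-memory model. This is a conceptual sketch, not the Cloud Map API: the `ServiceRegistry` class, the `events.pendulum.local` name, and the addresses are all hypothetical.

```typescript
// Conceptual model of service discovery: a logical name maps to whichever
// container addresses are currently registered under it.
class ServiceRegistry {
  private readonly instances = new Map<string, Set<string>>();

  // Called when a container starts.
  register(name: string, address: string): void {
    const addresses = this.instances.get(name) ?? new Set<string>();
    addresses.add(address);
    this.instances.set(name, addresses);
  }

  // Called when a container stops.
  deregister(name: string, address: string): void {
    this.instances.get(name)?.delete(address);
  }

  // Callers only ever know the logical name.
  resolve(name: string): string[] {
    const addresses = this.instances.get(name);
    return addresses ? Array.from(addresses) : [];
  }
}

const registry = new ServiceRegistry();
registry.register("events.pendulum.local", "10.0.1.12:8080");
registry.register("events.pendulum.local", "10.0.2.27:8080"); // a second task after scale-out
registry.deregister("events.pendulum.local", "10.0.1.12:8080"); // the first task is replaced

console.log(registry.resolve("events.pendulum.local")); // only the surviving address remains
```

The caller's code never changes as tasks come and go, which is the property that makes independent scaling of the two services workable.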

For distributing frontend traffic, we used a two-tier approach: CloudFront handles SSL termination and global CDN delivery, forwarding to an Application Load Balancer (ALB) for service-specific routing. This lets developers use the SDK without additional configuration while we handle routing /api requests to the app service and /events to the events service automatically.
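The path-based routing described above can be modeled in a few lines. This is a simplified sketch of prefix matching, not the ALB's rule engine or Pendulum's CDK code; the `RoutingRule` type and `routeRequest` function are hypothetical, and only the /api and /events prefixes come from the text.

```typescript
type BackendTarget = "app" | "events";

// Hypothetical rule shape: requests under a path prefix go to one backend service.
interface RoutingRule {
  pathPrefix: string;
  target: BackendTarget;
}

const routingRules: RoutingRule[] = [
  { pathPrefix: "/api", target: "app" },
  { pathPrefix: "/events", target: "events" },
];

// Returns the backend for a URL path (without query string), or undefined when
// no rule matches. Matching on "prefix" or "prefix/..." keeps a path such as
// "/apiary" from being routed to the app service by accident.
function routeRequest(path: string): BackendTarget | undefined {
  const match = routingRules.find(
    (rule) => path === rule.pathPrefix || path.startsWith(rule.pathPrefix + "/")
  );
  return match?.target;
}

console.log(routeRequest("/api/collections/todos")); // "app"
console.log(routeRequest("/events"));                // "events"
console.log(routeRequest("/index.html"));            // undefined: not a backend route
```

Because the SDK only sees one origin, a change to these rules is a deployment detail and never requires a change in frontend code.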

This architecture gives developers a unified endpoint: CloudFront distribution behaviors connect the frontend SDK to the appropriate backend service. Frontend applications deploy automatically to S3 and are served through CloudFront for global content delivery, while the ALB focuses on application-level routing and health checks.

This two-tier approach adds infrastructure complexity and potential latency compared to direct service access, but eliminates the need for developers to manage multiple endpoints or handle service discovery in their frontend code.